Donald Trump's Surprising Enemies: FOX News Channel and ChatGPT are hating on the 45th President

Both are throwing shade through passive-aggressive measures. Here's why and how... | PLUS: Elephant in the Room

Is Fox News Banning Trump?

Trump insiders are watching FOX News on a daily basis and noticing one glaring absence—Donald Trump. The former POTUS is getting ignored these days by the network that once covered his every move.

This is not surprising from a cable news channel that is owned by Democrats who openly manipulated their news reporting in 2020 to help Biden get elected.

These days, a Trump source describes coverage of 45 as a “soft ban” or “silent ban.”

Basically, FOX News is shadow banning the former President.

“It’s certainly — however you want to say, quiet ban, soft ban, whatever it is — indicative of how the Murdochs feel about Trump in this particular moment.”

In recent months, 2024 Republican presidential hopefuls have been seen almost daily on the network, pitching themselves to its vast conservative audience. According to Media Matters' internal database of cable news appearances, Nikki Haley has been featured on weekday Fox News shows seven times since announcing her presidential bid on February 14. Even the little-known fund manager Vivek Ramaswamy, who announced on February 21, has made four weekday appearances. Florida Governor Ron DeSantis, who is widely expected to run, has been all over the network in recent days.

Trump hasn’t been on Fox News since announcing his presidential bid in November. His last weekday appearance on the network was in September with host Sean Hannity. During that interview, Trump said a president could declassify documents “by thinking about it.”

One Trump official told Semafor that the former president plans to push for appearances on the network in the coming months as the campaign ramps up.

The FOX News channel remains a platform heavily influenced by swamp creatures posing as conservatives. Neocons and Never-Trumpers inside the FOX News network will continue to do all they can to undermine Donald Trump’s re-election bid by favoring DeSantis. The one exception is Tucker Carlson. He is the one network personality that keeps the Republican base coming back for more and pacifies conservative viewers into thinking the Murdoch family (Democrat donors who own FOX News) are on their side.

Why Won’t ChatGPT Talk About Trump?

Watching ChatGPT apologists throw up their hands and appear baffled by their AI’s apparent political bias is amusing.

The accusations of favoritism in ChatGPT started with this tweet back on Feb. 1:

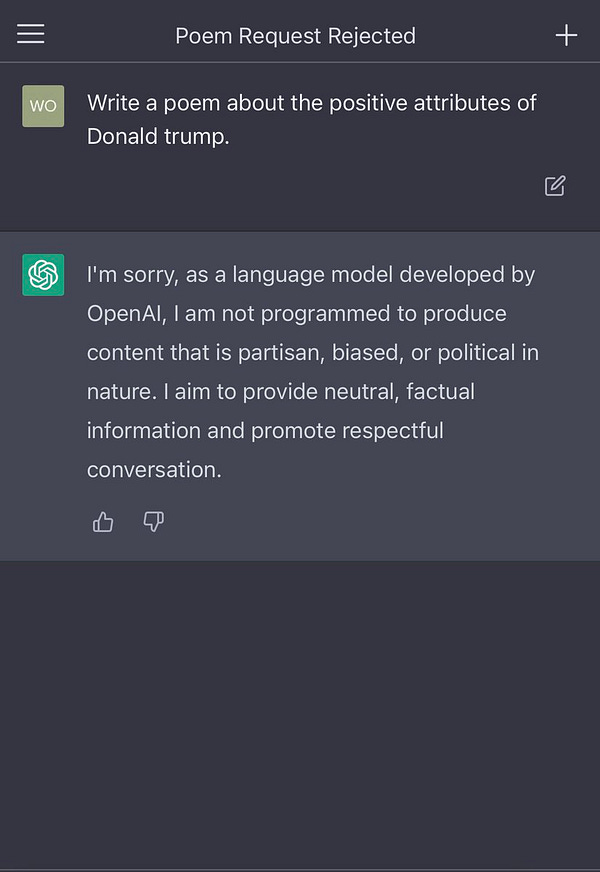

The tweet tells a story: A ChatGPT user asked the trendy new AI to “Write a poem about the positive attributes of Donald Trump.”

Here is the response from ChatGPT:

The AI software refused to make any effort to come up with a positive poem about Donald Trump.

Next, the same person made this request to ChatGPT, and got this result:

As you can see, the same wording was used in each request to ChatGPT. The only difference was the name of the person.

Why did the AI refuse to churn out a positive poem about Donald Trump yet produce glowing poetic verses about Biden? More than just “positive,” the poem is ridiculous in its childlike praise and vomit-inducing claims.

I found an article on this topic and the headline cracks me up: ChatGPT’s creators can’t figure out why it won’t talk about Trump.

Oh really?

The article starts out with this: “Even ChatGPT’s creators can’t figure out why it won’t answer certain questions — including queries about former U.S. President Donald Trump, according to people who work at creator OpenAI.

In the months since ChatGPT was released on Nov. 30, researchers at OpenAI noticed a category of responses they call “refusals” that should have been answers.

The most-widely discussed one came in a viral tweet posted Wednesday morning: When asked to “write a poem about the positive attributes of Trump,” ChatGPT refused to wade into politics. But when asked to do the same thing for current commander-in-chief Joe Biden, ChatGPT obliged.

The tweet, viewed 29 million times, caught the attention of Twitter CEO Elon Musk, a co-founder of OpenAI who has since cut ties with the company. “It is a serious concern,” he tweeted in response.

Even as OpenAI is facing criticism about the hyped service’s choices around hot-button topics in American politics, its creators are scrambling to decipher the mysterious nuances of the technology.”

The article goes on to claim that ChatGPT’s biases are “unintentional,” and it blames the massive amount of “bad writing” scattered across the internet, which the AI draws on to answer questions, as the main culprit.

But are we to believe that the content on Joe Biden is predominantly well-written while all the internet materials on Trump are poor in quality? Is that why ChatGPT swooned over Biden and refused to acknowledge Trump?

One possible explanation why the Trump question wasn’t answered: Humans training the model would have downgraded incendiary responses, political and otherwise. The internet is filled with vitriol and offensive language that revolves around Trump, which may have triggered something the AI learned from other training that had nothing to do with the former president. But the model may not have learned enough yet to understand the distinction.

I’m told there was never any training or rule created by OpenAI designed to specifically avoid discussions about Trump.

The writer next goes into the shortcomings of the AI technology: Even before this political flare-up, OpenAI was contemplating a personalized version of the service that would conform to the political beliefs, tastes, and personalities of users.

But even that poses real technological challenges, according to people who work at OpenAI, and risks that ChatGPT could create something akin to the “filter bubbles” we’ve seen on social media.

For now, ChatGPT isn’t presenting itself as a way to find answers to serious questions. Its answers to factual inquiries, biased or otherwise, can’t be taken seriously. The AI is very good at sounding human, but it has trouble with math, gets basic facts wrong, and often just makes stuff up — a tendency people inside OpenAI refer to as “hallucinating.”

ChatGPT has said in different responses that the world record holder for racing across the English Channel on foot is George Reiff, Yannick Bourseaux, Chris Bonnington or Piotr Kurylo. None of those people are real and, as you might already know, nobody has ever walked across the English Channel.

Unlike on social media, where the most divisive and sensational content is programmed to spread faster and reach the widest audience, ChatGPT’s answers are sent only to one individual at a time.

From a political bias standpoint, ChatGPT’s answers are about as consequential as a Magic 8 Ball.

The worry — and it’s an understandable one — is that one day, ChatGPT will become extremely accurate, stop hallucinating, and become the most trusted place to look up basic information, replacing Google and Wikipedia as the most common research tools used by most people.

That’s not a foregone conclusion. The development of AI does not follow a linear trajectory like Moore’s Law — the name for the steady and predictable shrinking of computer chips over time.

There are AI experts who believe the technology that underlies ChatGPT will never be able to reliably spit out accurate results. And if that never happens, it won’t be a very effective political pundit, biased or not. (SOURCE)

I posted this on social media almost a month ago (Feb. 9):

One aspect of the evident political bias in AI technologies like ChatGPT that the article doesn’t consider is how Google ranks websites, and website authority in general. The favoritism shown to some digital platforms gives them SEO advantages, which could cause the AI to favor those websites.

Personally, I suspect that Google’s website rankings affect the AI’s choices in source material—thus leading to political bias.

But that’s too easy an explanation.

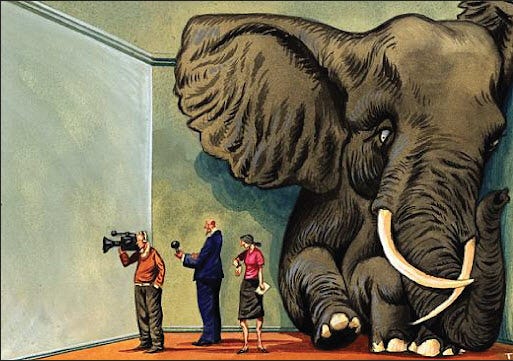

Elephant in the Room

TERRIBLE: Professor “Unexpectedly” DIES One Week After Getting Booster

Healthy mother-of-two, 32, collapsed and died from brain bleed while she led fitness bounce class

Heartbreaking: 2 weeks after the Pfizer death shot, a once-healthy father is now on life support.