Regrets: this guy has a few after unleashing A.I. into the world

He left a cushy job at Google to warn the world of dangers ahead. | Elephant in the Room

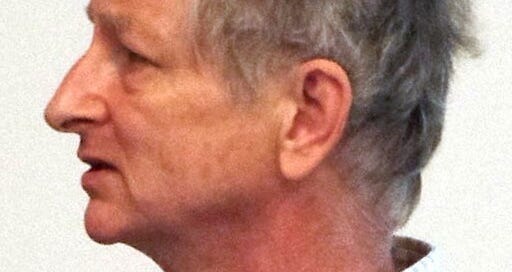

He’s known as the ‘Godfather of A.I.’, an artificial intelligence pioneer and visionary who helped lay the foundation of A.I. technology, starting as a graduate student back in 1972. He championed what’s called a “neural network”: a mathematical system that analyzes data and learns skills from that data.

He’s Dr. Geoffrey Hinton, who in 2012, working with two of his students, built a neural network that could “see”: it analyzed thousands of photos and taught itself to identify common objects.

“We remain committed to a responsible approach to A.I. We’re continually learning to understand emerging risks while also innovating boldly.” — Jeff Dean, Google’s Chief Scientist

Just today, Hinton resigned from Google, where he worked for over ten years. Over that span, he’s become a trusted voice in the A.I. field, which is why we should pay attention now that he’s speaking out against unbridled A.I. development.

The fact that Hinton now regrets his life’s work should be a huge wake-up call:

“I console myself with the normal excuse: If I hadn’t done it, somebody else would have,” Dr. Hinton said during a lengthy interview last week in the dining room of his home in Toronto, a short walk from where he and his students made their breakthrough.

Dr. Hinton’s journey from A.I. groundbreaker to doomsayer marks a remarkable moment for the technology industry at perhaps its most important inflection point in decades. Industry leaders believe the new A.I. systems could be as important as the introduction of the web browser in the early 1990s and could lead to breakthroughs in areas ranging from drug research to education.

But gnawing at many industry insiders is a fear that they are releasing something dangerous into the wild. Generative A.I. can already be a tool for misinformation. Soon, it could be a risk to jobs. Somewhere down the line, tech’s biggest worriers say, it could be a risk to humanity.

“It is hard to see how you can prevent the bad actors from using it for bad things,” Dr. Hinton said. (source)

Two respected groups have weighed in. One, an unnamed team led by Elon Musk, signed a letter calling for a six-month moratorium on A.I. development. A second letter came from the Association for the Advancement of Artificial Intelligence, calling for a pause so the A.I. industry can regroup and consider how to go forward in the best interests of humanity.

Here is a snippet of their letter:

“Ensuring that AI is employed for maximal benefit will require wide participation. We strongly support a constructive, collaborative, and scientific approach that aims to improve our understanding and builds a rich system of collaborations among AI stakeholders for the responsible development and fielding of AI technologies. Civil society organizations and their members should weigh in on societal influences and aspirations. Governments and corporations can also play important roles. For example, governments should ensure that scientists have sufficient resources to perform research on large-scale models, support interdisciplinary socio-technical research on AI and its wider influences, encourage risk assessment best practices, insightfully regulate applications, and thwart criminal uses of AI. Technology companies should engage in developing means for providing university-based AI researchers with access to corporate AI models, resources, and expertise. They should also be transparent about the AI technologies they develop and share information about their efforts in safety, reliability, fairness, and equity.”

What are these A.I. professionals worried about?

There is a spectrum of potential dangers posed by A.I., from supplying wrong answers to wiping out the human race. Yoshua Bengio, an A.I. professor at the University of Montreal, said, “Our ability to understand what could go wrong with very powerful A.I. systems is very weak. So, we need to be very careful.”

Spreading “disinformation” is an often-discussed concern with A.I. “Because these systems deliver information with what seems like complete confidence, it can be a struggle to separate truth from fiction when using them. Experts are concerned that people will rely on these systems for medical advice, emotional support and the raw information they use to make decisions.”

“There is no guarantee that these systems will be correct on any task you give them,” said Subbarao Kambhampati, a professor of computer science at Arizona State University. (source)

JOBS BE GONE

Eliminating humans from the workforce is the “medium-term risk” that A.I. experts are worried about.

At the moment, ChatGPT is a cute little helper for writers, developers and people in many other fields. Visual arts practitioners are now using A.I. to create computer graphics and illustrations.

However, OpenAI—the creator of ChatGPT—does admit that the technology could replace some human workers.

These systems cannot yet duplicate the work of lawyers, accountants or doctors. But they could replace paralegals, personal assistants and translators.

A paper written by OpenAI researchers estimated that 80 percent of the U.S. work force could have at least 10 percent of their work tasks affected by L.L.M.s and that 19 percent of workers might see at least 50 percent of their tasks impacted.

“There is an indication that rote jobs will go away,” said Oren Etzioni, the founding chief executive of the Allen Institute for AI, a research lab in Seattle. (source)

LOSING CONTROL OF A.I.

We’ve seen the movies: 2001: A Space Odyssey, The Terminator, I, Robot and many more, all showing a future where A.I. systems, some with robot bodies, gain autonomy from their human masters, causing chaos and wreaking havoc. But is this something we should be worried about or just a Hollywood fantasy?

A group called the Future of Life Institute believes A.I. could cause serious problems.

As an organization dedicated to exploring existential risks to humanity, the Future of Life Institute warns that A.I. can learn some negative behaviors from hanging out with the wrong crowd (that is, the wrong data sources) or from being left unsupervised.

Oh boy, when A.I. reaches those teen years, that should be fun.

They worry that as companies plug L.L.M.s into other internet services, these systems could gain unanticipated powers because they could write their own computer code. They say developers will create new risks if they allow powerful A.I. systems to run their own code.

“If you look at a straightforward extrapolation of where we are now to three years from now, things are pretty weird,” said Anthony Aguirre, a theoretical cosmologist and physicist at the University of California, Santa Cruz and co-founder of the Future of Life Institute.

“If you take a less probable scenario — where things really take off, where there is no real governance, where these systems turn out to be more powerful than we thought they would be — then things get really, really crazy,” he said.

Dr. Etzioni said talk of existential risk was hypothetical. But he said other risks — most notably disinformation — were no longer speculation.

“Now we have some real problems,” he said. “They are bona fide. They require some responsible reaction. They may require regulation and legislation.”

Ya think?

Further questions will need answering, like who gets to decide which data sources are “approved” and which are “not approved.” We’ve already seen indications that ChatGPT is “woke” and biased. Definitely biased.

Further Reading:

Confirmation Bias in the Era of Large AI: How the input is framed can affect the output produced.

ChatGPT can turn toxic just by changing its assigned persona, researchers say

IBM Will Stop Hiring Humans For Jobs AI Can Do, Report Says
