The Sickos’ Favorite Social Media

We've known this since Pizzagate, haven't we? So why hasn't this been cleaned up yet?

Well, I’ll be…

I’m shocked—SHOCKED, I tell ya!

Can you believe that Instagram, that popular social media platform filled with narcissistic selfies and rented Lambos, is a favorite hangout for pedophiles?!

Okay, yes, I’m exaggerating my shock level.

Some of us—many subscribers to News Detectives, I’m sure—have known this for five or six years—around the time the Pizzagate scandal broke, somewhere in the middle of Trump’s term. You may recall the name James Alifantis and his disgusting IG account.

However, maybe we should be shocked?

Because this goes much deeper than a few pedos using code words and emojis to communicate their twisted lusts on the IG.

What we are discovering now is that Instagram’s algorithm “helps connect and promote a vast network of accounts openly devoted to the commission and purchase of underage-sex content, according to investigations by The Wall Street Journal and researchers at Stanford University and the University of Massachusetts Amherst.” (source)

To put it more plainly, when a pedophile uses IG, the IG algorithm recommends other accounts that cater to pedophiles.

Pedophiles have long used the internet, but unlike the forums and file-transfer services that cater to people who have interest in illicit content, Instagram doesn’t merely host these activities. Its algorithms promote them. Instagram connects pedophiles and guides them to content sellers via recommendation systems that excel at linking those who share niche interests, the Journal and the academic researchers found.

Though out of sight for most on the platform, the sexualized accounts on Instagram are brazen about their interest. The researchers found that Instagram enabled people to search explicit hashtags such as #pedowhore and #preteensex and connected them to accounts that used the terms to advertise child-sex material for sale. Such accounts often claim to be run by the children themselves and use overtly sexual handles incorporating words such as “little slut for you.”

Instagram accounts offering to sell illicit sex material generally don’t publish it openly, instead posting “menus” of content. Certain accounts invite buyers to commission specific acts. Some menus include prices for videos of children harming themselves and “imagery of the minor performing sexual acts with animals,” researchers at the Stanford Internet Observatory found. At the right price, children are available for in-person “meet ups.”

The promotion of underage-sex content violates rules established by Meta as well as federal law.

In response to questions from the Journal, Meta acknowledged problems within its enforcement operations and said it has set up an internal task force to address the issues raised. “Child exploitation is a horrific crime,” the company said, adding, “We’re continuously investigating ways to actively defend against this behavior.”

Meta said it has in the past two years taken down 27 pedophile networks and is planning more removals. Since receiving the Journal queries, the platform said it has blocked thousands of hashtags that sexualize children, some with millions of posts, and restricted its systems from recommending users search for terms known to be associated with sex abuse. It said it is also working on preventing its systems from recommending that potentially pedophilic adults connect with one another or interact with one another’s content.

Alex Stamos, the head of the Stanford Internet Observatory and Meta’s chief security officer until 2018, said that getting even obvious abuse under control would likely take a sustained effort.

“That a team of three academics with limited access could find such a huge network should set off alarms at Meta,” he said, noting that the company has far more effective tools to map its pedophile network than outsiders do. “I hope the company reinvests in human investigators,” he added.

Technical and legal hurdles make determining the full scale of the network hard for anyone outside Meta to measure precisely.

Because the laws around child-sex content are extremely broad, investigating even the open promotion of it on a public platform is legally sensitive.

In its reporting, the Journal consulted with academic experts on online child safety. Stanford’s Internet Observatory, a division of the university’s Cyber Policy Center focused on social-media abuse, produced an independent quantitative analysis of the Instagram features that help users connect and find content.

The Journal also approached UMass’s Rescue Lab, which evaluated how pedophiles on Instagram fit into the larger ecosystem of online child exploitation. Using different methods, both entities were able to quickly identify large-scale communities promoting criminal sex abuse.

Test accounts set up by researchers that viewed a single account in the network were immediately hit with “suggested for you” recommendations of purported child-sex-content sellers and buyers, as well as accounts linking to off-platform content trading sites. Following just a handful of these recommendations was enough to flood a test account with content that sexualizes children.

The Stanford Internet Observatory used hashtags associated with underage sex to find 405 sellers of what researchers labeled “self-generated” child-sex material—or accounts purportedly run by children themselves, some saying they were as young as 12. According to data gathered via Maltego, a network mapping software, 112 of those seller accounts collectively had 22,000 unique followers.

Underage-sex-content creators and buyers are just a corner of a larger ecosystem devoted to sexualized child content. Other accounts in the pedophile community on Instagram aggregate pro-pedophilia memes, or discuss their access to children. Current and former Meta employees who have worked on Instagram child-safety initiatives estimate the number of accounts that exist primarily to follow such content is in the high hundreds of thousands, if not millions.

A Meta spokesman said the company actively seeks to remove such users, taking down 490,000 accounts for violating its child safety policies in January alone.

“Instagram is an on ramp to places on the internet where there’s more explicit child sexual abuse,” said Brian Levine, director of the UMass Rescue Lab, which researches online child victimization and builds forensic tools to combat it. Levine is an author of a 2022 report for the National Institute of Justice, the Justice Department’s research arm, on internet child exploitation.

Instagram, estimated to have more than 1.3 billion users, is especially popular with teens. The Stanford researchers found some similar sexually exploitative activity on other, smaller social platforms, but said they found that the problem on Instagram is particularly severe. “The most important platform for these networks of buyers and sellers seems to be Instagram,” they wrote in a report slated for release on June 7.

More from the Wall Street Journal report:

In many cases, Instagram has permitted users to search for terms that its own algorithms know may be associated with illegal material. In such cases, a pop-up screen for users warned that “These results may contain images of child sexual abuse,” and noted that production and consumption of such material causes “extreme harm” to children. The screen offered two options for users: “Get resources” and “See results anyway.”

In response to questions from the Journal, Instagram removed the option for users to view search results for terms likely to produce illegal images. The company declined to say why it had offered the option.

The pedophilic accounts on Instagram mix brazenness with superficial efforts to veil their activity, researchers found. Certain emojis function as a kind of code, such as an image of a map—shorthand for “minor-attracted person”—or one of “cheese pizza,” which shares its initials with “child pornography,” according to Levine of UMass. Many declare themselves “lovers of the little things in life.”

Accounts identify themselves as “seller” or “s3ller,” and many state their preferred form of payment in their bios. These seller accounts often convey the child’s purported age by saying they are “on chapter 14,” or “age 31” followed by an emoji of a reverse arrow.

Some of the accounts bore indications of sex trafficking, said Levine of UMass, such as one displaying a teenager with the word WHORE scrawled across her face.

Some users claiming to sell self-produced sex content say they are “faceless”—offering images only from the neck down—because of past experiences in which customers have stalked or blackmailed them. Others take the risk, charging a premium for images and videos that could reveal their identity by showing their face.

Many of the accounts show users with cutting scars on the inside of their arms or thighs, and a number of them cite past sexual abuse.

Even glancing contact with an account in Instagram’s pedophile community can trigger the platform to begin recommending that users join it.

You would think an algorithm so tightly wound would make it easier for Meta to clean up this pedo mess. But so far, its efforts appear lackluster.

As with most social-media platforms, the core of Instagram’s recommendations are based on behavioral patterns, not by matching a user’s interests to specific subjects. This approach is efficient in increasing the relevance of recommendations, and it works most reliably for communities that share a narrow set of interests.

In theory, this same tightness of the pedophile community on Instagram should make it easier for Instagram to map out the network and take steps to combat it. Documents previously reviewed by the Journal show that Meta has done this sort of work in the past to suppress account networks it deems harmful, such as with accounts promoting election delegitimization in the U.S. after the Jan. 6 Capitol riot.

Like other platforms, Instagram says it enlists its users to help detect accounts that are breaking rules. But those efforts haven’t always been effective.

Sometimes user reports of nudity involving a child went unanswered for months, according to a review of scores of reports filed over the last year by numerous child-safety advocates.

Earlier this year, an anti-pedophile activist discovered an Instagram account claiming to belong to a girl selling underage-sex content, including a post declaring, “This teen is ready for you pervs.” When the activist reported the account, Instagram responded with an automated message saying: “Because of the high volume of reports we receive, our team hasn’t been able to review this post.”

After the same activist reported another post, this one of a scantily clad young girl with a graphically sexual caption, Instagram responded, “Our review team has found that [the account’s] post does not go against our Community Guidelines.” The response suggested that the user hide the account to avoid seeing its content.

A Meta spokesman acknowledged that Meta had received the reports and failed to act on them. A review of how the company handled reports of child sex abuse found that a software glitch was preventing a substantial portion of user reports from being processed, and that the company’s moderation staff wasn’t properly enforcing the platform’s rules, the spokesman said. The company said it has since fixed the bug in its reporting system and is providing new training to its content moderators.

Even when Instagram does take down accounts selling underage-sex content, they don’t always stay gone.

If you are wondering what to do with this information, one obvious response is to stop using Instagram. That’s reasonable, and you won’t get any objections from me on that decision. Go for it.

One thing we could all do to be a little more proactive is call our congressional representatives, tell them our concerns about IG, and ask them to do something about it, for the sake of the children being abused.

Got another solution to getting pedos off of IG? Tell us in the comments.

Come on, Zuck, get your act together, or people might start noticing whom your platform is placating. It makes you look sus.

Elephant in the Room

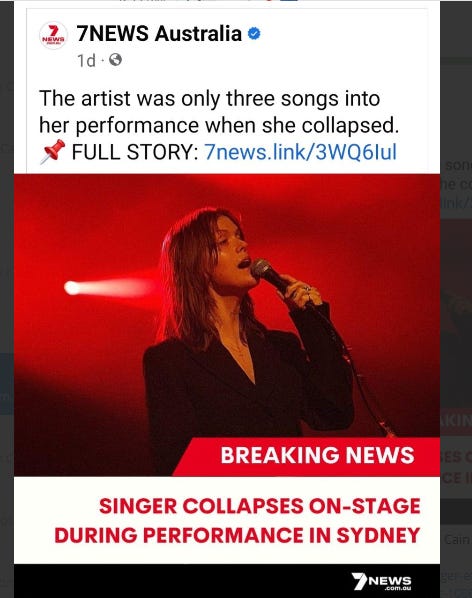

1. “Is the evidence there to say 'causation'? YES! Singapore Airlines moved early to be the globe's first fully vaccinated airline! they fell for the vaccine fraud! must have D-dimers, troponin, chest MRI”

5. Her stage name is Ethel Cain